Step 1: Install Dependencies

First, ensure you have Python 3 installed on your system. Then, install the necessary dependencies using pip:

```bash
pip3 install requests beautifulsoup4
```

Step 2: Fetch Web Pages

Use the requests library to fetch the HTML content of the web pages you want to scrape.

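```python
import requests

def fetch_page(url):
    """Return the HTML of `url` as text, or None if the request fails."""
    try:
        # A timeout keeps the call from hanging on an unresponsive server.
        response = requests.get(url, timeout=10)
        # Treat 4xx/5xx responses as errors instead of returning an error page.
        response.raise_for_status()
        return response.text
    except requests.RequestException as e:
        print('Error fetching page:', e)
        return None
```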

Step 3: Parse HTML Content

Use BeautifulSoup to parse the HTML content and extract the data you need.

```python
from bs4 import BeautifulSoup

def parse_page(html):
    soup = BeautifulSoup(html, 'html.parser')
    # Example: Extracting titles of articles
    titles = [title.text for title in soup.find_all('h2')]
    return titles
```

Step 4: Handle Pagination

If the data you want to scrape is spread across multiple pages, you’ll need to handle pagination.
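As a minimal sketch of one common pattern, the loop below assumes the site exposes its pages through a `?page=N` query parameter and that an empty result means the last page has been passed. Both are assumptions about the target site, and `scrape_all_pages` and `max_pages` are hypothetical names. The fetch and parse functions are passed in as arguments, so the loop works with any functions that return HTML (or `None`) and a list of items.

```python
def scrape_all_pages(base_url, fetch_page, parse_page, max_pages=50):
    """Collect items from base_url?page=1, ?page=2, ... until a page is empty.

    Assumes (hypothetically) that the site paginates with a `page` query
    parameter. `fetch_page` returns HTML or None; `parse_page` returns a list.
    """
    results = []
    for page in range(1, max_pages + 1):
        html = fetch_page(f'{base_url}?page={page}')
        if not html:
            break  # fetch failed: stop rather than guess at later pages
        items = parse_page(html)
        if not items:
            break  # an empty page is taken to mean there are no more pages
        results.extend(items)
    return results
```

The `max_pages` cap is a safety net: if the site keeps returning content for any page number, the loop still terminates. Sites that paginate with "next" links instead would need the parser to extract the next URL.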

Step 5: Error Handling and Retries

Add error handling for cases where fetching or parsing fails. You can also retry failed requests, ideally with a delay between attempts so that a struggling server is not hit again immediately.
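One possible shape for the retry logic is sketched below. It assumes a fetch function that returns the page text on success and `None` on failure; `fetch_with_retries`, `attempts`, and `base_delay` are hypothetical names chosen for illustration, and the doubling delay (exponential backoff) is one reasonable policy rather than the only one.

```python
import time

def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) up to `attempts` times, doubling the wait each time.

    `fetch` is expected to return the page text, or None on failure.
    """
    for attempt in range(attempts):
        html = fetch(url)
        if html is not None:
            return html
        if attempt < attempts - 1:
            # Exponential backoff: base_delay, 2*base_delay, 4*base_delay, ...
            sleep(base_delay * 2 ** attempt)
    return None
```

The `sleep` parameter exists only so the waiting can be replaced in tests; in normal use the default `time.sleep` is fine.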

Step 6: Writing Data to Files or Databases

Once you’ve scraped the data, you can write it to files (e.g., JSON, CSV) or store it in a database for further analysis.
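For the file case, Python's standard `json` and `csv` modules are usually enough. The sketch below assumes the scraped data is a plain list of title strings; the function names and the single `title` column are illustrative choices, not a required layout.

```python
import csv
import json

def save_titles_json(titles, path):
    """Write the list of titles to `path` as a JSON array."""
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(titles, f, ensure_ascii=False, indent=2)

def save_titles_csv(titles, path):
    """Write one title per row under a single `title` header."""
    # newline='' lets the csv module control line endings itself.
    with open(path, 'w', encoding='utf-8', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(['title'])
        writer.writerows([t] for t in titles)
```

JSON keeps nested structure if each record later grows more fields, while CSV is easier to open in a spreadsheet.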

Step 7: Throttling and Rate Limiting

To avoid overwhelming the server or getting blocked, implement throttling and rate limiting to control the frequency of your requests.
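A simple way to do this is to enforce a minimum interval between requests. The `Throttle` class below is a hypothetical helper written for illustration, and the right interval depends on the site; it is a sketch of the idea, not a full rate limiter.

```python
import time

class Throttle:
    """Enforce a minimum interval between consecutive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Sleep just long enough to keep requests min_interval seconds apart."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In use, create one instance (for example `throttle = Throttle(2.0)`) and call `throttle.wait()` immediately before each request.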

Step 8: User-Agent Rotation and Proxy Support

To avoid being detected as a bot, rotate User-Agent headers and use proxies to mimic human behavior.
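The rotation itself can be as simple as cycling through two lists. In the sketch below, the User-Agent strings and proxy URLs are placeholders, and `next_request_options` is a hypothetical helper; it only builds the `headers` and `proxies` keyword arguments that `requests.get` accepts.

```python
import itertools

# Placeholder values: replace with real User-Agent strings and proxy URLs.
USER_AGENTS = [
    'ExampleAgent/1.0',
    'ExampleAgent/2.0',
]
PROXIES = [
    'http://proxy1.example.com:8080',
    'http://proxy2.example.com:8080',
]

_agents = itertools.cycle(USER_AGENTS)
_proxies = itertools.cycle(PROXIES)

def next_request_options():
    """Return keyword arguments for requests.get with the next agent and proxy."""
    proxy = next(_proxies)
    return {
        'headers': {'User-Agent': next(_agents)},
        # requests expects a mapping from URL scheme to proxy URL.
        'proxies': {'http': proxy, 'https': proxy},
    }
```

A request would then look like `requests.get(url, timeout=10, **next_request_options())`.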

Step 9: Testing and Maintenance

Test your web scraper thoroughly and regularly update it to handle changes in the website’s structure or behavior.
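One way to keep tests fast and repeatable is to keep the network out of them. The sketch below uses the standard `unittest` module and a hypothetical `scrape` wrapper that receives its fetch and parse functions as arguments, so a test can substitute canned HTML. The fake functions here are stand-ins; in a real test suite you would more likely pass your actual parser and a saved copy of a page.

```python
import unittest

def scrape(url, fetch, parse):
    """Fetch `url` and parse it; return an empty list if the fetch failed."""
    html = fetch(url)
    return parse(html) if html else []

class ScrapeTests(unittest.TestCase):
    def test_returns_parsed_items(self):
        # A canned page stands in for the network, so the test is repeatable.
        fake_fetch = lambda url: '<h2>First</h2>'
        fake_parse = lambda html: ['First'] if 'First' in html else []
        self.assertEqual(scrape('https://example.com', fake_fetch, fake_parse), ['First'])

    def test_failed_fetch_gives_empty_list(self):
        def fail_if_called(html):
            raise AssertionError('parse should not run when the fetch fails')
        self.assertEqual(scrape('https://example.com', lambda url: None, fail_if_called), [])
```

Saved in a file, these tests can be run with `python3 -m unittest`. When the site's markup changes, updating the saved HTML and rerunning the tests shows which selectors need attention.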

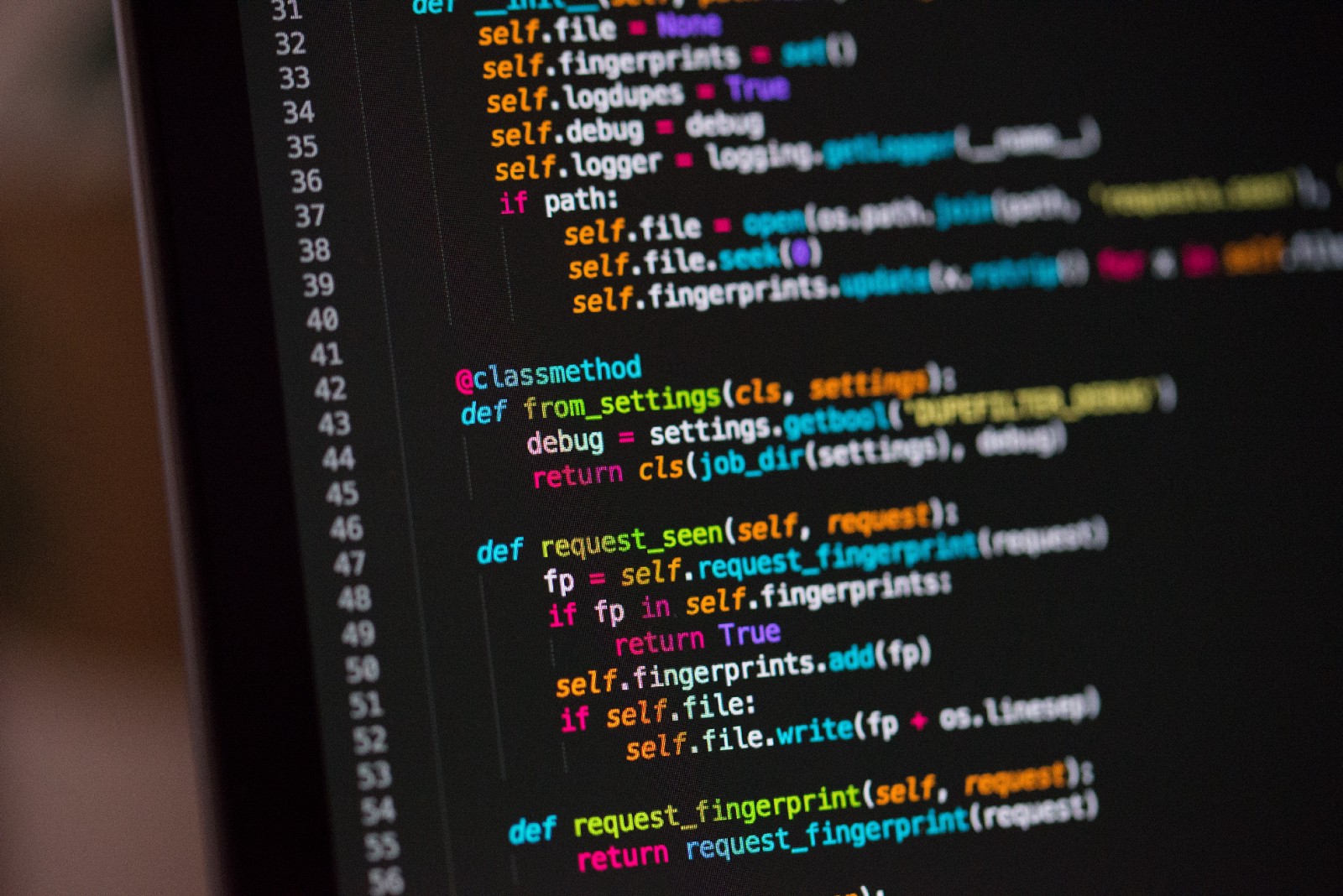

Example:

Here’s a simplified example that combines the fetch and parse steps to scrape a list of article titles from a website:

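```python
import requests
from bs4 import BeautifulSoup

def fetch_page(url):
    """Return the HTML of `url` as text, or None if the request fails."""
    try:
        # A timeout keeps the call from hanging on an unresponsive server.
        response = requests.get(url, timeout=10)
        # Treat 4xx/5xx responses as errors instead of returning an error page.
        response.raise_for_status()
        return response.text
    except requests.RequestException as e:
        print('Error fetching page:', e)
        return None

def parse_page(html):
    """Return the text of every <h2> element in the page."""
    soup = BeautifulSoup(html, 'html.parser')
    titles = [title.text for title in soup.find_all('h2')]
    return titles

def main():
    url = 'https://example.com/articles'
    html = fetch_page(url)
    if html:
        articles = parse_page(html)
        print('Scraped articles:', articles)

if __name__ == '__main__':
    main()
```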

Conclusion:

Creating an advanced web scraper with Python 3 involves much the same considerations as with Python 2: handling dynamic content, implementing pagination, handling errors, and more. Review the terms of service of the websites you’re scraping and follow best practices to avoid legal issues and keep your scraper working over time.
